I think you could say with relative accuracy that there are three main bottlenecks in a computer: CPU, memory, and disk. There are some outliers that people might try to pile in: video card performance, or network throughput if you’re tweaking interrupts on your 10GigE card. But the basic three are pretty universal.

To cut to the chase: I hit disk bottlenecks sometimes, CPU bottlenecks almost never, and RAM bottlenecks all the time. And sometimes high load that looks to be on the CPU is really just I/O wait cycles. But RAM is special: if you have enough RAM, disk throughput becomes less important. At least, that’s true of redundant disk I/O (rereading data you’ve already read), which seems to account for a lot of it.
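One rough way to tell real CPU load from I/O wait on Linux is to look at the aggregate `cpu` line in `/proc/stat`. The sketch below assumes a reasonably modern kernel that reports at least the first eight counters (user, nice, system, idle, iowait, irq, softirq, steal); the function name `iowait_share` and the sample line are made up for illustration.

```python
def iowait_share(cpu_line: str) -> float:
    """Return the fraction of CPU ticks spent in iowait for a /proc/stat 'cpu' line."""
    fields = cpu_line.split()
    # fields[0] is the label ("cpu"); the next eight values are cumulative ticks:
    # user, nice, system, idle, iowait, irq, softirq, steal.
    # Guest time is already counted inside user/nice, so it is left out of the total.
    ticks = [int(value) for value in fields[1:9]]
    total = sum(ticks)
    return ticks[4] / total if total else 0.0


# Hypothetical sample line; on a real machine, read the first line of /proc/stat.
sample = "cpu  4000 0 1000 3000 2000 0 0 0 0 0"
print(f"iowait share: {iowait_share(sample):.0%}")
```

These counters are cumulative since boot, so to see what the machine is doing right now you would take two readings a few seconds apart and run the same calculation on the differences.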

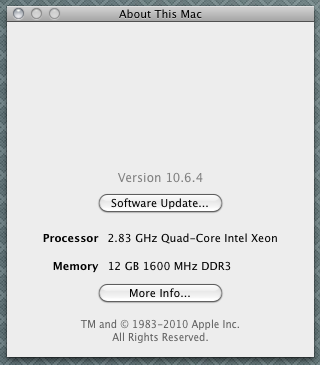

What interests me, though, is that in my opinion almost everything is RAM-starved. My laptop has 2GB and I get near the limit fairly often. I’m thinking of trying to take it to 4GB. The jury’s out on whether or not it’ll see more than 3GB, and others complain that 3GB causes you to lose out a bit on speed.

But here’s the thing. I maintain things like a MySQL server with 32GB RAM. It’s not RAM-bound per se: we could switch to a machine with 1GB RAM and MySQL would still run fine. The memory is overwhelmingly configured for various forms of cache. But it’s not enough: there’s still a steady stream of disk activity, and a non-negligible number of queries that have to write temporary tables to disk.

RAM is cheap. It’d cost me about $50 to buy 4GB of RAM for my laptop. The reason RAM stops being cheap is that most motherboards don’t give you enough slots. Both of my laptops can only take two DIMMs, which means I need dual 2GB sticks. They’re both based on older 32-bit chipsets, so I can’t exceed 4GB, but if I wanted to, I’d need dual 4GB sticks, and those are expensive. Even among decent servers, it’s hard to find many that give you more than 8 slots, making 32GB hard to exceed.
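The two ceilings here (slot count and address width) boil down to a `min()`. This is only back-of-the-envelope arithmetic: the function name is made up, and it assumes the address limit is simply 2 to the power of the address bits, ignoring whatever the chipset reserves for devices.

```python
def max_usable_ram_gb(slots: int, stick_gb: int, address_bits: int) -> int:
    """Upper bound on usable RAM in GB: the lesser of what fits and what can be addressed."""
    installed_gb = slots * stick_gb
    addressable_gb = 2 ** address_bits // 2 ** 30  # bytes -> GB
    return min(installed_gb, addressable_gb)


print(max_usable_ram_gb(slots=2, stick_gb=2, address_bits=32))  # my laptops today
print(max_usable_ram_gb(slots=2, stick_gb=4, address_bits=32))  # bigger sticks, same 32-bit ceiling
print(max_usable_ram_gb(slots=8, stick_gb=4, address_bits=64))  # a typical 8-slot server
```

In practice a 32-bit machine usually shows somewhat less than the full 4GB, because part of the address space is taken up by memory-mapped devices.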

So what I’d really like to see someone bring to market is a 1U box with as many memory slots as it’s physically possible to fit in. 1U is still tall enough to have standard DIMMs standing up, and most 1U chassis are extremely deep. I bet you could fit 256 slots in. Then throw in a compact power supply, a standard LGA775 socket (allowing a quad-core chip), a good Gigabit NIC or four, and an optional Fibre Channel card. No hard drives. Maybe a 4GB CompactFlash card if you really want it to have its own storage. Oh, and make sure the motherboard is pretty versatile in terms of RAM requirements and FSB. Oh, and don’t force me to go with ECC. If this were a single database server, it might be worth buying top-notch ECC RAM. But if this were just for caching things, I don’t care. Cache isn’t meant to be permanent, so an error is no big deal.

256 slots, and you could fill it with ultra-cheap 1GB DDR2 DIMMs. (Heck, at work, we have a bag of “useless” 1GB sticks that we pulled out.) You can get ’em for $10 a pop, meaning 256GB RAM would cost about $2,560. I suspect the system would command a high premium, but really, it’s just $2,560 worth of RAM and a $200 processor. A 2GB DIMM is about twice as much ($20/stick), but $5,000 for half a terabyte of RAM isn’t bad. Though 4GB DIMMs are still considerably more: they’re hard to find for under $100.
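To make the comparison concrete, here is the same arithmetic as a quick script. The prices are the rough figures quoted above (with $100 taken as the floor for a 4GB stick), and the 256-slot box is of course hypothetical, as is the `fill_cost` helper.

```python
def fill_cost(slots: int, stick_gb: int, price_per_stick: int) -> tuple[int, int]:
    """Return (total GB, total dollars) for filling every slot with identical sticks."""
    return slots * stick_gb, slots * price_per_stick


SLOTS = 256  # the imaginary 1U box

# (stick size in GB, rough price per stick in dollars)
for stick_gb, price in [(1, 10), (2, 20), (4, 100)]:
    total_gb, total_cost = fill_cost(SLOTS, stick_gb, price)
    per_gb = total_cost / total_gb
    print(f"{stick_gb}GB sticks: {total_gb}GB for ${total_cost:,} (${per_gb:.2f}/GB)")
```

The 1GB and 2GB sticks both work out to about $10 per GB, while 4GB sticks come in at $25 per GB or more, which is why piling on slots beats buying denser DIMMs.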

I think this would be a slam-dunk product. memcached is pretty popular, and it’s increasingly being used in previously unheard-of roles, like a level 2 cache for MySQL. There are also a lot of machines that just need gobs of RAM, whether they’re database servers, virtual machine hosts, or application servers. And tell me a file server (sitting in front of a Fibre Channel array) with 256GB RAM for caches and buffers wouldn’t be amazing.

So, someone, hurry up and make the thing. The key is to keep it fairly cheap. Cheaper than buying 4GB DIMMs, at least.