I’m not sure yet if this is a good idea or not, nor if I want to pay for it long-term, but I’m playing with an experiment that I think is kind of neat. (But maybe I’m biased.)

Background

For a long time now, I’ve been using CloudFront to serve static assets (images, CSS, JS, etc.) on the blogs and a few other sites I host. That content never changes, so I can offload it to a global Content Delivery Network. IMHO it’s win-win: visitors get a faster experience because most of those assets come from a server near them (CloudFront has a pretty extensive network), and my server is relieved of serving most images, so it’s only handling requests for the blog pages themselves.

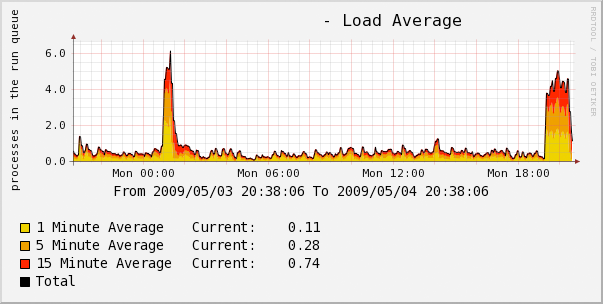

Except, serving images is easy; they’re just static files, and I’ve got plenty of bandwidth. What’s hard is serving blog pages: they’re dynamically generated, and involve database queries, parsing templates, and so forth… Easily 100ms or more, even when things go well. What I’d really like is to cache those pages, and just remove them from cache when something changes. And I’ve actually had that in place for a while now, using varnish in front of the blogs. It’s worked very well; more than 90% of visits are served out of cache. (And a decent bit of the 10% that miss are things that can’t be cached, like form POSTs.) It alleviates backend load, and makes the site much faster when cache hits occur, which is most of the time.

But doing this requires a lot of control over the cache, because I need to be able to quickly invalidate the cache. CloudFront doesn’t make that easy, and they also don’t support IPv6. What I really wanted to do was run varnish on multiple servers around the world myself. But directing people to the closest server isn’t easy. Or, at least, that’s what I thought.

Amazon’s Latency-Based Routing

Amazon (more specifically, AWS) has supported latency-based routing for a while now. If you run instances in, say, Amazon’s Virginia/DC (us-east-1) region and in their Ireland (eu-west-1) data centers, you can set up LBR records for a domain to point to both, and they’ll answer DNS queries for whichever IP address is closer to the user (well, the user’s DNS server).

It turns out that, although the latency is measured in reference to AWS data centers, the IPs in your records don’t actually have to point to AWS data centers.

So I set up the following:

- NYC (at Digital Ocean), mapped to us-east-1 (AWS’s DC/Virginia region)

- Frankfurt, Germany (at Vultr), mapped to eu-central-1 (AWS’s Frankfurt region)

- Los Angeles (at Vultr), mapped to us-west-1 (AWS’s “N. California” region)

- Singapore (at Digital Ocean), mapped to ap-southeast-1 (AWS’s Singapore region)

The locations aren’t quite 1:1, but what I realized is that it doesn’t actually matter. Los Angeles isn’t exactly Northern California, but the latency difference is insignificant, and the alternative was all the traffic going to Boston, so it’s a major improvement.
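To make the mapping above concrete, here is a minimal Python sketch of the Route 53 change batch it implies: one A record per edge node, each tagged with the AWS region it stands in for. The hostname, the set identifiers, the TTL, and the IP addresses (taken from the documentation range) are placeholders I made up for illustration, not the real values.

```python
# Placeholder data: (set identifier, AWS region used for latency, edge IP).
# The IPs come from the 192.0.2.0/24 documentation range, not real servers.
EDGES = [
    ("nyc", "us-east-1", "192.0.2.10"),
    ("frankfurt", "eu-central-1", "192.0.2.20"),
    ("los-angeles", "us-west-1", "192.0.2.30"),
    ("singapore", "ap-southeast-1", "192.0.2.40"),
]


def latency_change_batch(hostname, edges, ttl=60):
    """Build a Route 53 ChangeBatch with one latency record per edge node."""
    changes = []
    for set_id, region, ip in edges:
        changes.append({
            "Action": "UPSERT",
            "ResourceRecordSet": {
                "Name": hostname,
                "Type": "A",
                # SetIdentifier tells apart records that share a name and type.
                "SetIdentifier": set_id,
                # Region is what makes this a latency-based record.
                "Region": region,
                "TTL": ttl,
                "ResourceRecords": [{"Value": ip}],
            },
        })
    return {"Comment": "latency records for edge nodes", "Changes": changes}


batch = latency_change_batch("blog.example.com.", EDGES)

# Applying the batch needs boto3 and AWS credentials, so it is left commented out.
# The hosted zone ID below is a placeholder.
# import boto3
# boto3.client("route53").change_resource_record_sets(
#     HostedZoneId="ZONE_ID_PLACEHOLDER", ChangeBatch=batch)
```

Route 53 only looks at the `Region` field when deciding which record to answer with; it never checks where the IP address actually lives, which is why pointing at Digital Ocean and Vultr works.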

Doing this in DNS isn’t perfect, either: if you are in Japan and use a DNS server in Kansas, you’re going to get records as if you’re in Kansas. That’s insane and you shouldn’t do it, but again, it doesn’t entirely matter. You’re generally going to get routed to the closest location, and when you don’t, it’s not really a big deal. Worst case, you see perhaps 300ms latency.

Purging

It turns out that there’s a Multi-Varnish HTTP Purge plugin, which seems to work. The downside is that it’s slow: not because of anything wrong with the plugin, but because, whenever a page changes, WordPress now has to make connections to four servers across the planet.

I want to hack together a little API to accept purge requests and return immediately, and then execute them in the background, and in parallel. (And why not log the time it takes to return in statsd?)
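Roughly what I have in mind, as a Python sketch rather than a finished service: hand each purge to a thread pool so the caller gets control back right away while the four requests run in parallel. The edge addresses are placeholders, the function names are made up, and it assumes each Varnish node is configured to accept an HTTP PURGE request from the machine sending it.

```python
import time
import urllib.request
from concurrent.futures import ThreadPoolExecutor

# Placeholder edge addresses from the documentation IP range.
EDGES = ["192.0.2.10", "192.0.2.20", "192.0.2.30", "192.0.2.40"]

# Shared pool, so purges run in the background and in parallel.
_pool = ThreadPoolExecutor(max_workers=8)


def send_purge(edge, host, path, timeout=5):
    """Send one PURGE request to a single edge; return the elapsed seconds."""
    request = urllib.request.Request(
        f"http://{edge}{path}",
        method="PURGE",
        headers={"Host": host},  # the edge is addressed by IP, so set Host explicitly
    )
    start = time.monotonic()
    with urllib.request.urlopen(request, timeout=timeout) as response:
        response.read()
    return time.monotonic() - start


def purge_everywhere(host, path, edges=EDGES, send=send_purge):
    """Queue one purge per edge and return the futures without waiting on them."""
    return {edge: _pool.submit(send, edge, host, path) for edge in edges}
```

Calling `purge_everywhere("blog.example.com", "/some-post/")` returns as soon as the work is queued. Each future eventually holds the elapsed time for its edge (or the exception, if the purge failed), which is the number I’d want to ship off to statsd.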

Debugging, and future ideas

I added a little bit of VCL so that /EDGE_LOCATION will return a page showing your current location.

I think I’m going to leave things this way for a while and see how things go. I don’t really need a global CDN in front of my site, but with Vultr and Digital Ocean both having instances in the $5-10 range, it’s fairly cheap to experiment with for a while.

Ideally, I’d like to do a few things:

- Enable HTTP/2 support, so everything can be handled over one connection. It has gotten some great reviews.

- Play around with keeping backend connections open from the edge nodes to my backend server, to speed up requests that miss cache.

- Play around with something like wanproxy to try to de-dupe/speed up traffic between edge nodes and my backend server.

Update

I just tried Pingdom’s Full Page Test tool, after making sure the Germany edge node had this post in cache.

Page loaded in 291ms, and that’s after fetching all images and stylesheets. I’d take that anywhere, but for my site, loaded from Stockholm? I’m really pleased with that.