From my “Random ideas I wish I had the resources to try out…” file…

The way the “pretty big” sites work is that they have a cluster of servers… A few are database servers, many are webservers, and a few are front-end caches. The theory is that the webservers do the ‘heavy lifting’ to generate a page… But many pages, such as the main page of the news, Wikipedia, or even these blogs, don’t need to be generated every time. The main page only updates every now and then. So you have a caching server, which basically handles all of the connections. If the page is in cache (and still valid), it’s served right then and there. If the page isn’t in cache, it will get the page from the backend servers and serve it up, and then add it to the cache.
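To make that hit-or-miss logic concrete, here is a minimal sketch in Python. It is not how any particular caching server is implemented. The `PageCache` class, the `fetch_from_backend` callable, and the injectable `clock` are all names made up for illustration.

```python
import time
from typing import Callable, Dict, Tuple


class PageCache:
    """Read-through cache: serve from memory if fresh, otherwise ask the backend."""

    def __init__(self, fetch_from_backend: Callable[[str], str], ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._fetch = fetch_from_backend   # hypothetical: whatever generates the page
        self._ttl = ttl_seconds            # how long a cached copy stays valid
        self._clock = clock                # injectable so the cache is easy to test
        self._store: Dict[str, Tuple[float, str]] = {}  # path -> (expires_at, body)

    def get(self, path: str) -> str:
        now = self._clock()
        entry = self._store.get(path)
        if entry is not None and entry[0] > now:
            return entry[1]                # in cache and still valid: serve it
        body = self._fetch(path)           # miss or expired: hit the backend
        self._store[path] = (now + self._ttl, body)  # then add it to the cache
        return body
```

A real cache would also need an eviction policy and some care around concurrent misses for the same page, both of which this sketch ignores.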

The way the “really big” sites work is that they have many data centers across the country and your browser hits the closest one. This improves load times and adds redundancy (data centers do periodically go offline: The Planet did it just last week when a transformer inside blew up and the fire marshals made them shut down all the generators). Depending on whether they’re filthy rich or not, they’ll either use GeoIP-based DNS, or have elaborate routing going on. Many companies offer these services, by the way. It’s called a CDN, or Content Delivery Network. Akamai is the most obvious one, though you’ve probably used LimeLight before, too, along with some other less-prominent ones.

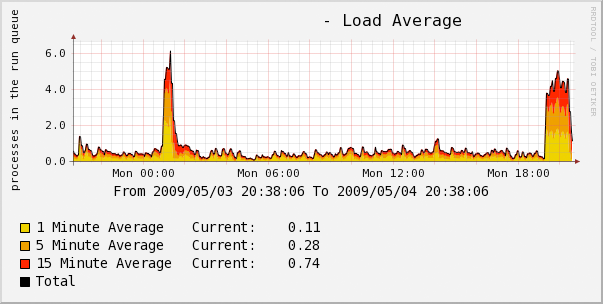

I’ve been toying with SilverStripe a bit, which is very spiffy, but it has one fatal flaw in my mind: its out-of-box performance is atrocious. I was testing it in a VPS I haven’t used before, so I don’t have a good frame of reference, but I got between 4 and 6 pages/second under benchmarking. That was after I turned on MySQL query caching and installed APC. Of course, I was using SilverStripe to build pages that would probably stay unchanged for weeks at a time. The 4-6 pages/second is similar to how WordPress behaved before I worked on optimizing it. For what it’s worth, static content (that is, stuff that doesn’t require talking to databases and running code) can handle 300-1000 pages/second on my server, according to some benchmarks I ran.

There were two main ways to enhance SilverStripe’s performance that I thought of. (Well, a third option, too: realize that no one will visit my SilverStripe site and leave it as-is. But that’s no fun.) The first is to ‘fix’ SilverStripe itself. With WordPress, I tweaked MySQL and set up APC (which gave a bigger boost than with SilverStripe, but still not a huge gain). But then I ended up coding the main page from scratch, and it uses memcache to store the generated page in RAM for a period of time. Instantly, benchmarking showed that I could handle hundreds of pages a second on the meager hardware I’m hosted on. (Soon to change…)

The other option, and one that may actually be preferable, is to just run the software normally, but stick it behind a cache. This might not be an instant fix, as I’m guessing the generated pages are tagged to not allow caching, but that can be fixed. (Aside: people seem to love setting huge expiry times for cached data, like having it cached for an hour. The main page here caches data for 30 seconds, which means that, worst case, the backend would be generating that page twice a minute. Although if there were a network involved, I might bump it up or add a way to selectively purge pages from the cache.) As for the caching software, squid is the most commonly used, but I’ve also heard interesting things about varnish, which was tailor-made for this purpose and is supposed to be a lot more efficient. There’s also pound, which seems interesting, but doesn’t cache on its own. One catch is that varnish doesn’t yet support gzip compression of pages, which I think would be a major boost in throughput. (Although at the cost of server resources, of course… Unless you could get it working with a hardware gzip card!)
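The arithmetic behind that 30-second aside is easy to sketch. The function below is a hypothetical helper, and it assumes the cache refreshes a page at most once per expiry window (that is, simultaneous misses for the same page get collapsed into one backend request).

```python
def backend_requests_per_minute(ttl_seconds: float,
                                visitor_requests_per_minute: float) -> float:
    """Upper bound on how often the backend regenerates ONE cached page."""
    # Assumption: at most one refresh per TTL window, however many visitors arrive.
    refreshes_per_minute = 60.0 / ttl_seconds
    # The backend can never see more requests than visitors actually make.
    return min(refreshes_per_minute, visitor_requests_per_minute)
```

With a 30-second TTL the bound is two backend hits a minute whether the page gets 100 or 100,000 views. With an hour-long TTL it drops to one hit an hour, which is barely any extra savings in exchange for much staler pages.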

But then I started thinking… That caching frontend doesn’t have to be local! Pick up a machine in another data center as a ‘reverse proxy’ for your site. Viewers hit that, and it will keep an updated page in its cache. Pick a server up when someone’s having a sale and set it up.

But then, you can take it one step further, and pick up boxes to act as your caches in multiple data centers. One on the East Coast, one in the South, one on the West Coast, and one in Europe. (Or whatever your needs call for.) Use PowerDNS with GeoIP to direct viewers to the closest cache. (Indeed, this is what Wikipedia does: they have servers in Florida, the Netherlands, and Korea… DNS hands out the closest server based on where your IP is registered.) You can also keep DNS records with a fairly short TTL, so if one of the cache servers goes offline, you can just pull it from the pool and it’ll stop receiving traffic. You can also use the cache nodes themselves as DNS servers, to help make sure DNS is highly redundant.
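Here is a rough sketch of the “hand out the closest cache” decision, in Python. It is not how PowerDNS or any GeoIP backend actually works internally. The node names and coordinates are invented, and it assumes the visitor’s IP has already been turned into a latitude and longitude by some GeoIP lookup.

```python
import math
from typing import AbstractSet, Dict, Optional, Tuple

# Hypothetical cache nodes: name -> (latitude, longitude).
CACHE_NODES: Dict[str, Tuple[float, float]] = {
    "us-east": (40.7, -74.0),
    "us-south": (32.8, -96.8),
    "us-west": (37.8, -122.4),
    "europe": (52.4, 4.9),
}


def haversine_km(a: Tuple[float, float], b: Tuple[float, float]) -> float:
    """Great-circle distance between two (lat, lon) points, in kilometers."""
    lat1, lon1 = math.radians(a[0]), math.radians(a[1])
    lat2, lon2 = math.radians(b[0]), math.radians(b[1])
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * 6371.0 * math.asin(math.sqrt(h))


def closest_node(visitor: Tuple[float, float],
                 offline: AbstractSet[str] = frozenset()) -> Optional[str]:
    """Pick the nearest node that has not been pulled from the pool."""
    candidates = {name: loc for name, loc in CACHE_NODES.items()
                  if name not in offline}
    if not candidates:
        return None  # every node is down; the caller needs a fallback
    return min(candidates, key=lambda name: haversine_km(visitor, candidates[name]))
```

Pulling a dead node from the pool is just a matter of adding it to `offline`. How quickly visitors actually move over still depends on the DNS TTL. Geographic distance is also only a stand-in for network distance, so treat the result as a reasonable guess rather than the truly fastest node.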

It seems to me that it’d be a fairly promising idea, although I think there are some potential kinks you’d have to work out. (Given that you’ll probably have 20-100ms latency in retrieving cache misses, do you set a longer cache duration? But then, do you have to wait an hour for your urgent change to get pushed out? Can you flush only one item from the cache? What about uncacheable content, such as when users have to log in? How do you monitor many nodes to make sure they’re serving the right data? Will ISPs obey the TTLs on your DNS records? Most of these things have obvious solutions, really, but the point is that it’s not an off-the-shelf solution, but something you’d have to mold to fit your exact setup.)
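Two of those kinks, flushing a single item and skipping the cache for logged-in users, might look something like this in a homegrown setup. This is only a sketch under assumptions: the `session_id` cookie name is made up, and the cache is modeled as a plain dictionary keyed by path.

```python
from typing import Dict, Mapping

SESSION_COOKIE = "session_id"  # hypothetical name for the login cookie


def is_cacheable(method: str, cookies: Mapping[str, str]) -> bool:
    """Only cache read-only requests from visitors who are not logged in."""
    return method in ("GET", "HEAD") and SESSION_COOKIE not in cookies


def purge(cache: Dict[str, str], path: str) -> bool:
    """Drop one page from the cache; returns True if it was actually cached."""
    return cache.pop(path, None) is not None
```

With several cache nodes, the purge would have to be sent to every node, which is part of why this ends up being something you mold to your own setup.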

Aside: I’d like to put nginx, lighttpd, and Apache in a face-off. I’m reading good things about nginx.