This morning we thought the Internet was down in our office. Many sites we use constantly wouldn’t load, and many others, including our own site, were extremely slow. We knew, though, that our site was in tip-top shape, which had us baffled. A routing problem on our ISP’s end?

Nope, just Google being down. I searched for something and the results timed out. “Internet down for anyone else?” “Yeah, I can’t get into my Gmail.” “I was just trying to check Google Analytics, and that’s not working either.” “YouTube, too!” “Our site’s loading really slow, too. Must be our pipe.”

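It turns out that a ridiculous number of sites on the Internet use Google Analytics, including my employer’s site and my own. Each of those pages pulls its tracking script from Google’s servers, so when Google stops answering, the pages sit there waiting on it. (And just this afternoon I fixed the tags on the blogs so stats will track properly.)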

When my site goes offline, it’s not surprising. My host has to shut down periodically when they discover frayed wiring in the data center. If my employer went offline, it would be a bigger deal, but it’s something I could envision: our data center getting knocked offline, or me goofing the config on our production routers and pushing the bad changes out to all of them. But Google going down? That’s unheard of.

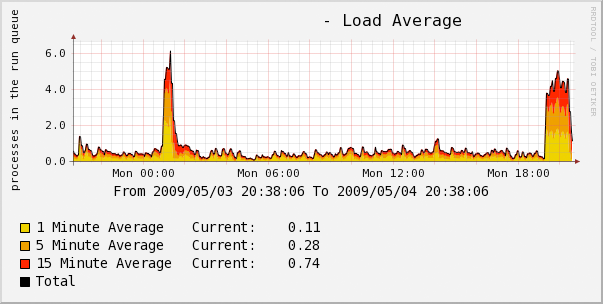

Arbor Networks seems to have monitoring equipment all over the place, which gives them some pretty good insight into Internet traffic. As they posted today, Google apparently fudged a route and it propagated out, sending most of their traffic through some low-level provider in Asia. The graph, to me, is the coolest part. When have you ever seen a graph showing a drop-off of many gigabits per second in such a short period? It’s orders of magnitude more than most of us are used to seeing.

Of course, times like this make me want to learn more about BGP4, the core routing protocol of the Internet. Anyone remember when YouTube went down last year? It turned out that Pakistan, trying to block YouTube within the country, had advertised a bogus route internally. A series of misconfigured routers let that route leak out, and soon it had propagated pretty much everywhere.
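Why can one bad announcement pull traffic away from the real owner? Routers forward each packet along the most specific (longest) prefix that matches its destination address. As widely reported, the bogus YouTube announcement was a more specific slice of YouTube’s own address block, so wherever it spread, it won. Here’s a minimal Python sketch of longest-prefix matching. The prefixes and origin labels are hypothetical, and real routers do this in hardware with a lot more going on (BGP path selection, filtering) than shown here.

```python
import ipaddress

# Hypothetical routing table: prefix -> who announced it.
# These private-range prefixes and labels are made up for illustration.
ROUTES = {
    ipaddress.ip_network("10.0.0.0/22"): "legitimate origin",
    ipaddress.ip_network("10.0.1.0/24"): "bogus announcement",  # more specific slice of the /22
}


def best_route(destination: str) -> str:
    """Return the origin of the most specific prefix that covers destination."""
    addr = ipaddress.ip_address(destination)
    matches = [net for net in ROUTES if addr in net]
    if not matches:
        raise LookupError(f"no route to {destination}")
    # Longest-prefix match: the covering route with the largest prefix length wins.
    best = max(matches, key=lambda net: net.prefixlen)
    return ROUTES[best]


if __name__ == "__main__":
    for dst in ("10.0.0.5", "10.0.1.5", "10.0.3.5"):
        print(dst, "->", best_route(dst))
```

Addresses inside the /24 go to the bogus announcer even though the legitimate /22 also covers them; everything else in the /22 still reaches the real owner.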